An Interview with Artist Adriana Ramić

Art — 28.04.17

Words by Sunny Lee

With her first solo exhibition, Machine that the larvae of configuration, artist Adriana Ramić will inhabit Kimberly Klark Gallery to host its first earthwork, among other pieces. Read on to learn more about what drew her to insect communication in the first place, and why she considers herself a technological pessimist.

Can you tell me about how this show came together?

For the past few years, I have been working with computer vision and machine learning, out of an interest in the relationships of interpretation and replication that occur. In the past this took the form of an optical character recognition program based on the morphologies of C. elegans, recurrent neural networks generating texts from a Croatian hedgehog caretaker’s blog and Dostoyevsky’s The Idiot, and, most recently, an exhibition based on the output of a recurrent neural network trained on the digital archive of Witte de With Center for Contemporary Art. While working on these projects, I started learning about an elementary school in Serbia directed by a friend’s mother, Osnovna Škola “Đura Jakšić.” I became captivated by my friend’s reports of the school, which had beautiful paintings of snails, ladybugs reading books, and other creatures on the wall. This past summer, I was able to visit it to start a project looking at the school through an optical character recognition system trained on ladybugs. Two photographs that I took there are used as source material for the show.

Installation view, Rome Was Built For A Day, curated by Marie Egger, at Witte de With Center for Contemporary Art. Photo Aad Hoogendoorn.

For those unfamiliar with your work, can you explain why insect pathways have been imposed as a kind of framework for the way we communicate? Also, how did you get to this revelation?

I’ve been interested in the way we look at animals or natural phenomena to understand the structures and logics of biological intelligence. Insect pathways, as evidence of communications and coordinations within a group, are one example that works as a shorthand for representing and discussing the machinations of complex systems.

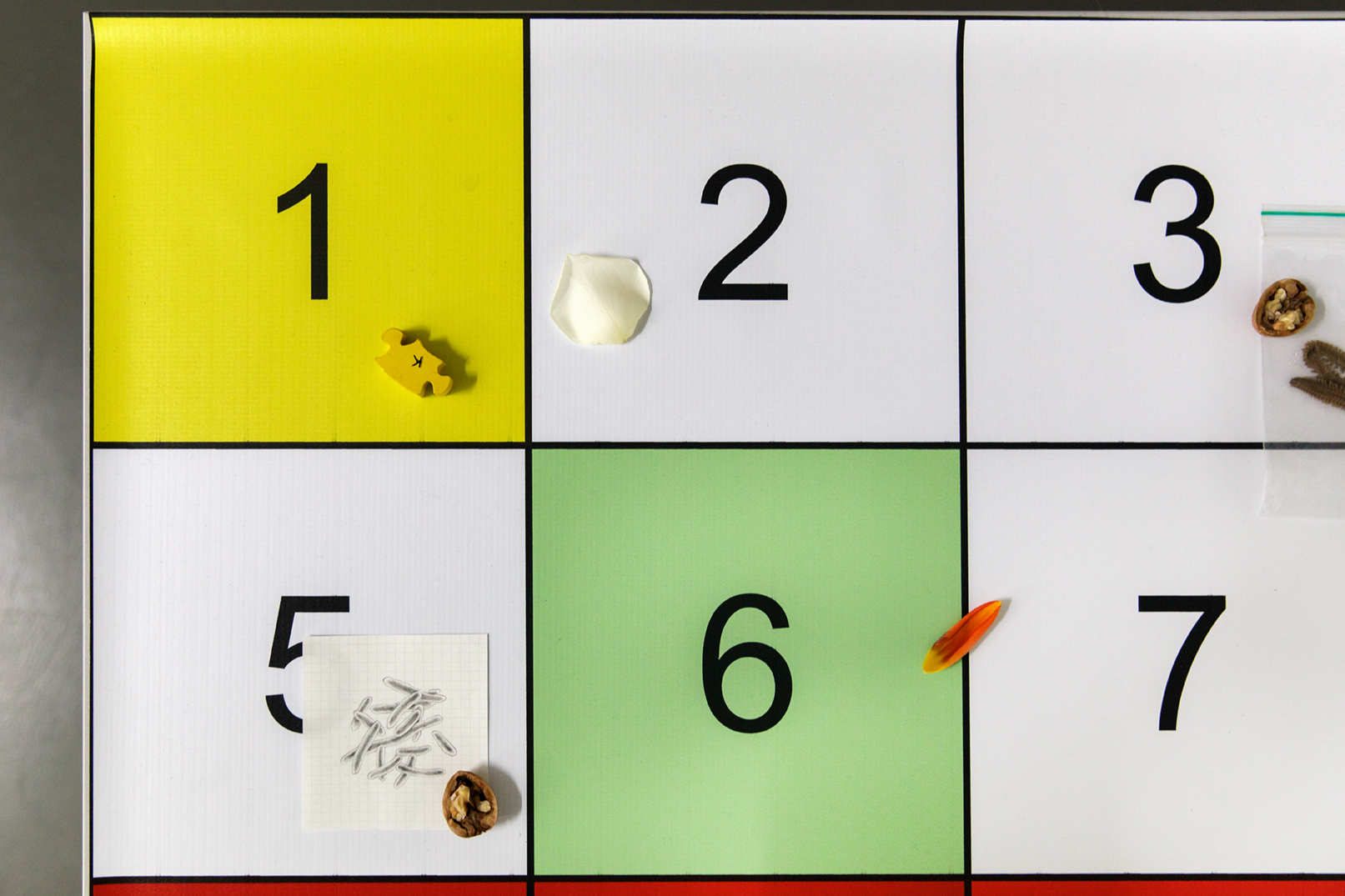

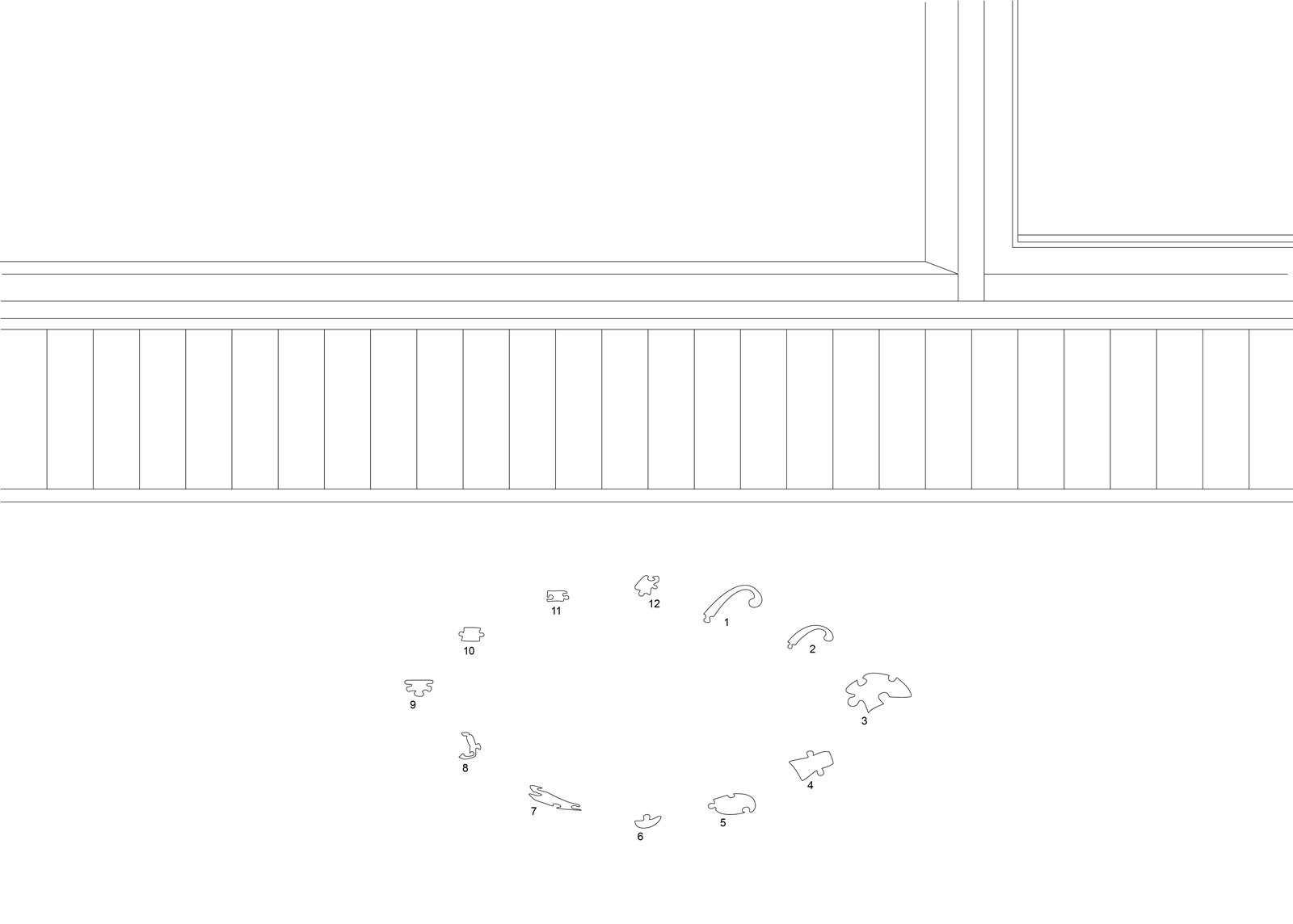

Setting for a wasp to rewrite the passage of time (Hours 8, 7, 11, 12, 1, 3, 6), 2016. Pieces 1–12 of wooden sleeping snail, open window.

You’ve been experimenting with insect pathways and modes of communication for a while now, but your recent exhibition focuses mainly on the ladybug. What is it exactly about the ladybug? Can you tell me a bit about that?

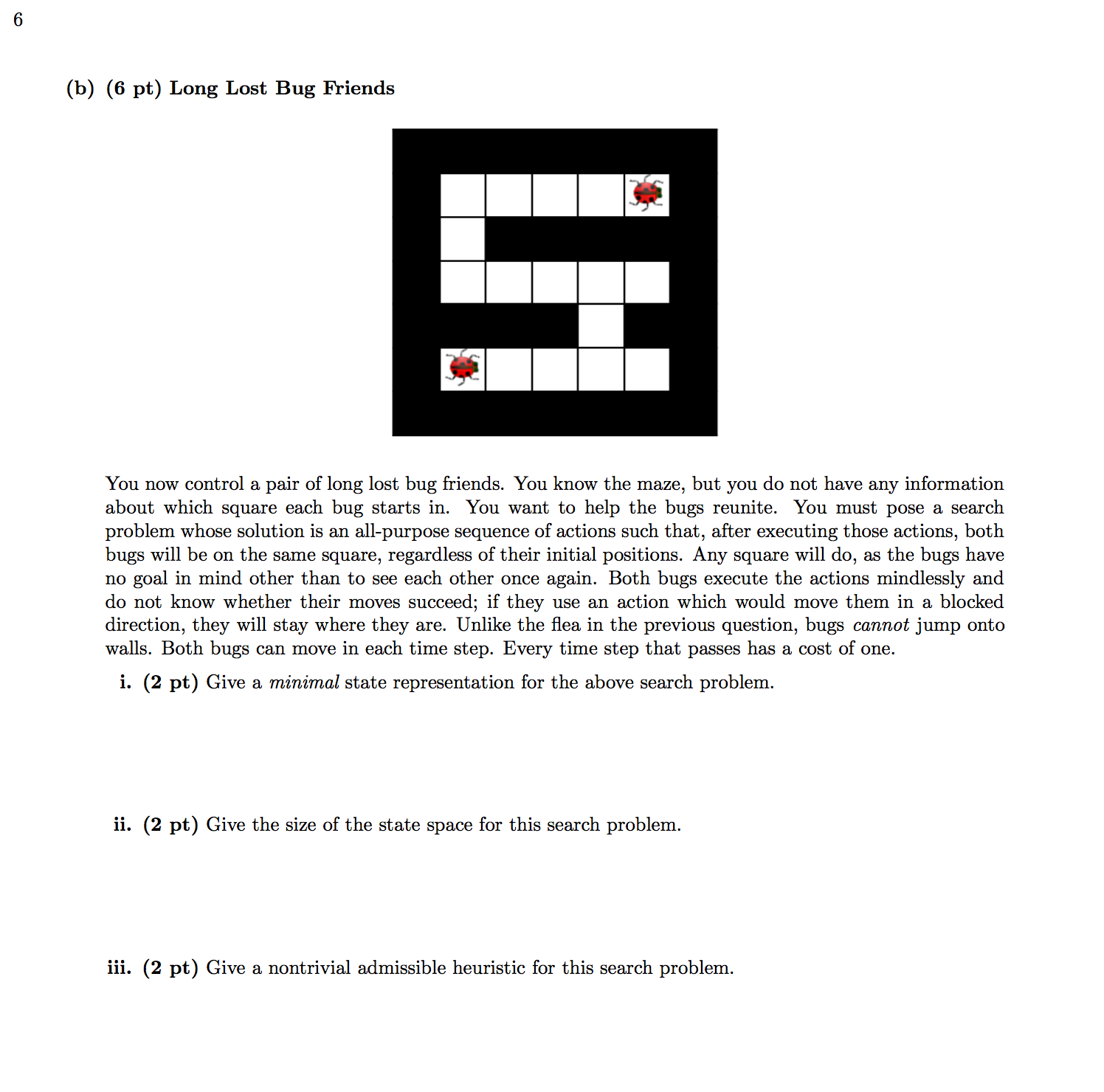

I came across a problem in a midterm exam for an “Introduction to Artificial Intelligence” course taught at UC Berkeley presenting two ladybugs at either end of a maze, asking the student to pose a search problem whose solution results in both ladybugs arriving at the same square. Finding this problem was a key that unlocked a link between general research in ladybugs and other questions about AI.

UC Berkeley CS188 Intro to AI, Spring 2012 midterm 1 p.6

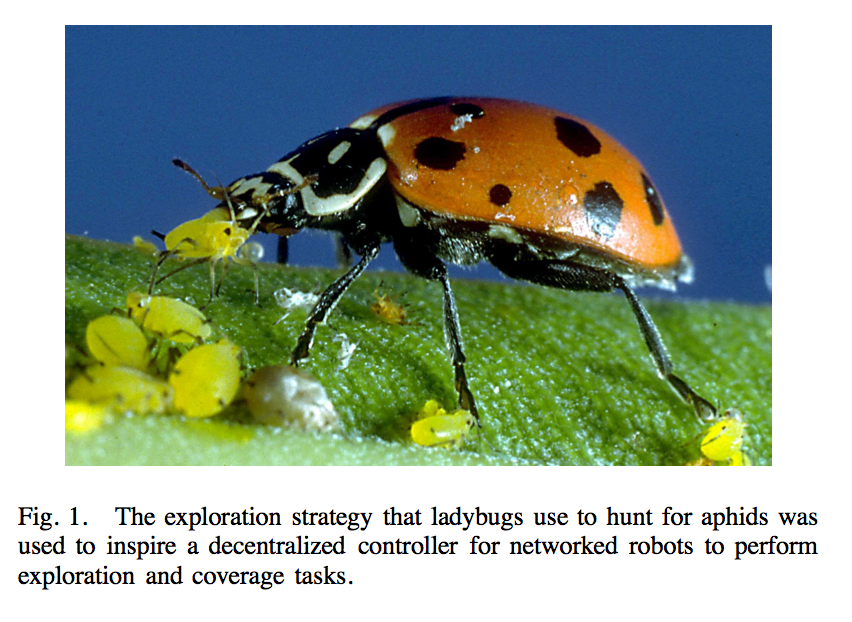

Ladybugs are widely seen as divinatory figures, and are also cannibalistic, particularly the larvae. Something strange seems to happen when linking those characteristics with the question of composing an artificial intelligence, though perhaps no stranger than with the realities of other earthly organisms.

Schwager, Mac, Francesco Bullo, David Skelly and Daniela Rus. “A ladybug exploration strategy for distributed adaptive coverage control.” 2008 IEEE International Conference on Robotics and Automation (2008).

first the fly violence of the world, 2016, Image and caption pair selected from computer vision training data set http://n.C.Inaintindingen.bl.lyphenine.com

Can you give a brief background on the use of language-producing algorithms in The Return Trip is Never the Same? How has it informed, or how does it differ from, the ladybug text recognition system? Can you explain the processes you created for it?

The Return Trip is Never the Same was a bit different, as I didn’t write a program, but automated my drawn interpretation of illustrations in a book into texts on a keyboard across many different languages. That emerged as a string of words generated by Swype’s predictive text system and as a translation generated by Google. A nice aspect of working with developing technologies is the ability to capture a moment in time, like taking a cerebral photograph of it. When I made that piece, Google was still using statistical machine translation for its Translate tool. As of 2016, they’ve switched to artificial neural networks for strikingly more natural-seeming translations. Each time a version of The Return Trip is Never the Same was exhibited, I’d recreate all the translations, wanting to hold on to the moments in time before Google Translate is able to pass a Turing test with ease. Connecting the two pieces was an interest in how one seemingly unlike vector (an ant path, a ladybug photo) can determine another vector of information when put into contact with it, whether through predictive typing and Google Translate or a computer vision program.

Installation view of книголюб жасау көкөніске ғалымның / Bibliophiles vegetables scientist / Légumes bibliophiles scientifique and Enngwe thabetse transfrontier r thawing / Transfrontier enjoyed another mountain r / Transfrontalière a connu une autre montagne r after The Return Trip is Never the Same, Plowing Solids curated by Rasmus Myrup, New Galerie at Yves Klein Archives. Photo Aurelien Molé.

I also like that you force the ladybug code to choose an image whether or not it bears any resemblance to the ladybug image. Goes to show how malleable tech can be…

Yeah, like you can edit the criteria for certainty there; similar to Norbert Wiener’s definition of information: “a single decision between equally probable alternatives.” Things that are 0.0001% correct can be passed as “the correct answer” if nothing else comes closer, without any indication of their accuracy.
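Editor’s note: the point about forced answers can be illustrated with a minimal sketch. This is not the artist’s program; the function names, labels, and scores below are hypothetical, and the softmax step is only one common way of turning raw scores into probabilities. The sketch shows that picking the highest-scoring class always returns an answer, however weak, unless a confidence threshold is added.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def forced_choice(labels, scores):
    """Always return the top label, even if it barely beats the others."""
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]


def thresholded_choice(labels, scores, min_confidence):
    """Return the top label only if its probability clears the threshold."""
    label, prob = forced_choice(labels, scores)
    if prob >= min_confidence:
        return label, prob
    return None, prob


# Hypothetical labels and near-identical scores: no class really stands out.
labels = ["snail", "ladybug", "hedgehog"]
scores = [0.0, 0.001, 0.0]

print(forced_choice(labels, scores))            # still names "ladybug"
print(thresholded_choice(labels, scores, 0.9))  # declines to answer
```

Raising or lowering `min_confidence` is one way of “editing the criteria for certainty”: with no threshold, a near coin-flip is reported exactly like a confident match.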

One thing that stuck with me is that you take these really sophisticated learning systems and use them to deconstruct and perhaps classify (in its own right) in crude, rudimentary ways. What is the impulse behind that?

Using a particular learning system, however sophisticated, comes with its own set of specific parameters and constraints. The goal of these systems is, more often than not, to process something in such a way that the output is all but indistinguishable from what a human might interpret. However, the lapses in the system’s disguise reveal the mechanism behind this attempt at reproducing cognitive behaviour. I’m interested in pushing this to a cruder extreme, where the outputs are exceedingly simplified to the point where they fall into a different world presenting different simultaneous meanings that could not be reached otherwise.

Speaking of which, what about the notion of classification is so fascinating to you?

I’ve been studying the languages of my family (Bosnian-Croatian-Serbian-Montenegrin and Polish) for the past few years, which, for various reasons, I wasn’t able to learn growing up. Classification, its criteria, and its effects are on my mind daily as I flip through flashcards on different terminologies. Another point of research has been datasets for machine learning, particularly the choice to represent only certain classes or categories, which draws a clear distinction between exclusion and priority, with particularly troubling fallout due to insidious prejudices threaded into these data selections. Also floating in that atmosphere is the larger ambition of mega-corporations to index and catalogue as much as possible as a means of exercising intelligent dominion. Unlike the vast datasets purporting to be of universal utility, there’s also a potential in structuring one’s own dataset or re-configuring the means with which existing materials can be read. The encyclopaedic ambition of Fischli & Weiss’ Suddenly this Overview, begun in 1981, feels resonant with this idea, for example: an encyclopaedia different in its specificities. Because it’s so much work to create one, there isn’t a wide range. What is interesting and strange about working with sets of data and machine learning is how much control you end up having to shape an alternative universe. Charles Gaines’ works are also incredible examinations of the idea of classification and semantics. His 1978–1979 series Faces still feels startlingly prescient in this age of facial recognition.

Tell me about your awesome collection of flash cards!

The ladybug optical character recognition program for Machine that the larvae of configuration reads a given input image and translates it into a string of characters; in this case, they’re usually around 943 characters long. These characters are then dispersed throughout the space as a method to encode an image at a large scale. Each flashcard has an image of something that starts with the letter, along with the letter itself. These have been sourced from Stanford’s ImageNet, a large research effort to compile a database of images used for computer vision credited with kickstarting machine learning, supplemented with further images scraped from the Google image results for every word in a dictionary of Bosnian-Croatian-Serbian-Montenegrin. It’s an exercise in how information, in this case an image, can be re-interpreted and re-expanded as a code with another universe of interpretation. On encoding, Friedrich Kittler wrote in Code, or How You Can Write Something Differently: “Condensed into telegraphic style, Turing’s statement thus reads: Whether everything in the world can be encoded is written in the stars. The fact that computers, since they too run on codes, can decipher alien codes is seemingly guaranteed from the outset. For the past three-and-a-half millennia, alphabets have been the prototype of everything that is discrete. But it has by no means been proven that physics, despite its quantum theory, is to be computed solely as a quantity of particles and not as a layering of waves. And the question remains whether it is possible to model as codes, down to syntax and semantics, all the languages that make us human and from which our alphabet once emerged in the land of the Greeks.”

Hare. time by French, 2016. Printed flashcard (Dojkinja). Photo Aad Hoogendoorn.

I feel like there’s an inherent tension between the way you work out your ideas materially even though they are conceived digitally. You actually brought up a really interesting question in that vein: “What are the implications of it when you enter it into reality?” You’re clearly invested in interpretation and translation (literally and thematically) and then reinterpreting and re-presenting it. What is it about information that compels you to obfuscate it, only to then re-present it?

It’s a challenge to be invested in the functions and outputs of programs that occur solely on a computer screen, and to reconcile that with the desire to manifest and explain them without resorting to replicating or illustrating. When this distributed semantic output of systems of interpretation enters an art context, it should be manifested in as blunt and low-cost a manner as possible, rather than being asked to angle for validity as an art object through refined material production and qualities. Perhaps ‘an instantiation’ is the way that I currently think about it, where the work becomes an extended instance of an intuitive contact and work with a system, and the materialisation of it is another layer of interpretation. The Walking Anemone (Hedgehog, Sock) pieces, which used a neural network to revive a defunct Croatian hedgehog caretaker’s blog with lines from The Idiot, for example, created numbered nodes in space that corresponded to a key that added another layer to the exhibition floor plan, and a nonlinear corpus.

“What on earth did it all mean? The most disturbing feature was the hedgehog. What was the symbolic signification of a hedgehog? What did they understand by it? What underlay it? Was it a cryptic message?

Poor General Epanchin “put his foot in it” by answering the above questions in his own way. He said there was no cryptic message at all. As for the hedgehog, it was just a hedgehog, which meant nothing–unless, indeed, it was a pledge of friendship,–the sign of forgetting of offences and so on. At all events, it was a joke, and, of course, a most pardonable and innocent one.

We may as well remark that the general had guessed perfectly accurately.

The prince, returning home from the interview with Aglaya, had sat gloomy and depressed for half an hour. He was almost in despair when Colia arrived with the hedgehog.

Then the sky cleared in a moment. The prince seemed to arise from the dead; he asked Colia all about it, made him repeat the story over and over again, and laughed and shook hands with the boys in his delight.” (The Idiot, Fyodor Dostoyevsky)

8žFAfŠ = m3Nca, 2016. Node of walking anemone (hedgehog, sock)

Do you ever feel stumped when working? Where do you go for inspiration?

I like to read a lot, mainly fiction, theory and research papers. Usually, once I start reading, something begins to click again.

What are some trippy AI learning systems we should know about? Any new apps that are super scary?

The continued descendants of encoding and computation into sentience — for example, Elon Musk’s Neuralink, Facebook’s mind-reading aspirations, and experiments on remotely controlling other life forms with human thought.

What is your favourite app/program to work with?

The command line.

Lastly, you’re a self-proclaimed technological pessimist with good reason. Can you get into that?

In general, my outlook is fairly dark, and technology is not an exception. While agnostic in a vacuum, new technologies are largely financed by the military-industrial complex, and instrumentalized by corporations to exert control over populations, trading convenience for surveillance.